Deep Dive - Test Engine Storage State Security

One of the key elements of using multiple user profiles in automated testing of Power Apps is the security model that allows login, and the security around those credentials. This deep dive explains how the login credential process works, how login tokens are encrypted, and how this relates to the Multi-Factor Authentication (MFA) process. We also explore the controls that the Entra security team can put in place, and the security model across Test Engine, Playwright, the Data Protection API, OAuth login to Dataverse, Dataverse, and the Key Value Store.

This section is intended for discussion and review purposes. It offers a comprehensive view of the security model for automated testing of Power Apps, including login credentials, token encryption, and Multi-Factor Authentication (MFA). Each organization should review it thoroughly, then assess and adapt these insights to align with its own security requirements, compliance processes, and protocols.

Security Context

This discussion fits into the wider Secure Future Initiative (SFI) landscape, a comprehensive approach to ensuring that security is embedded in every aspect of our solutions. The initiative is built on three core principles: Secure By Design, Secure By Default, and Secure in Operations. By integrating these principles, we aim to create robust, resilient, and secure applications that can withstand evolving cyber threats.

Security Principles

In today’s digital landscape, security is paramount. The SFI emphasizes a proactive approach to security, ensuring that every layer of our solutions is fortified against potential threats. This involves a culture of continuous improvement, robust governance, and adherence to best practices across all stages of development and operations. Let’s look at the key principles.

Secure By Design

Security is a fundamental aspect of our design process. We build security-first tests to ensure that our solutions are inherently secure from the outset. Looking specifically at the Power Platform through the lens of the Power Apps Test Engine, this includes:

- Building on Security concepts in Microsoft Dataverse

- User Profiles: Support for multiple user profiles to verify how the solution behaves for different users, for example when an application is not shared with the signed-in user.

Secure By Default

The aim is for solutions to come with security protections enabled and enforced by default. This means:

- Default Security Settings: Ensuring that security settings are enabled by default, requiring no additional configuration.

- Automated Testing: Using the Power Apps Test Engine to create and verify tests as part of the build and release process.

- Least Privilege Principle: Ensuring that default roles and permissions follow the principle of least privilege, and testing that users' access rights are limited to the bare minimum necessary.

Secure in Operations

Continuous security validation and verification are crucial to maintaining a secure operational environment. This involves:

- Continuous Integration and Deployment (CI/CD): The ability to create and share test profiles inside CI/CD pipelines to continuously validate and verify security configurations.

- Multi-Factor Authentication (MFA) and Conditional Access: Ensuring that solutions work seamlessly with MFA and Conditional Access policies.

End to End Process

With that context in place, let's explore the possible end-to-end process at a high level.

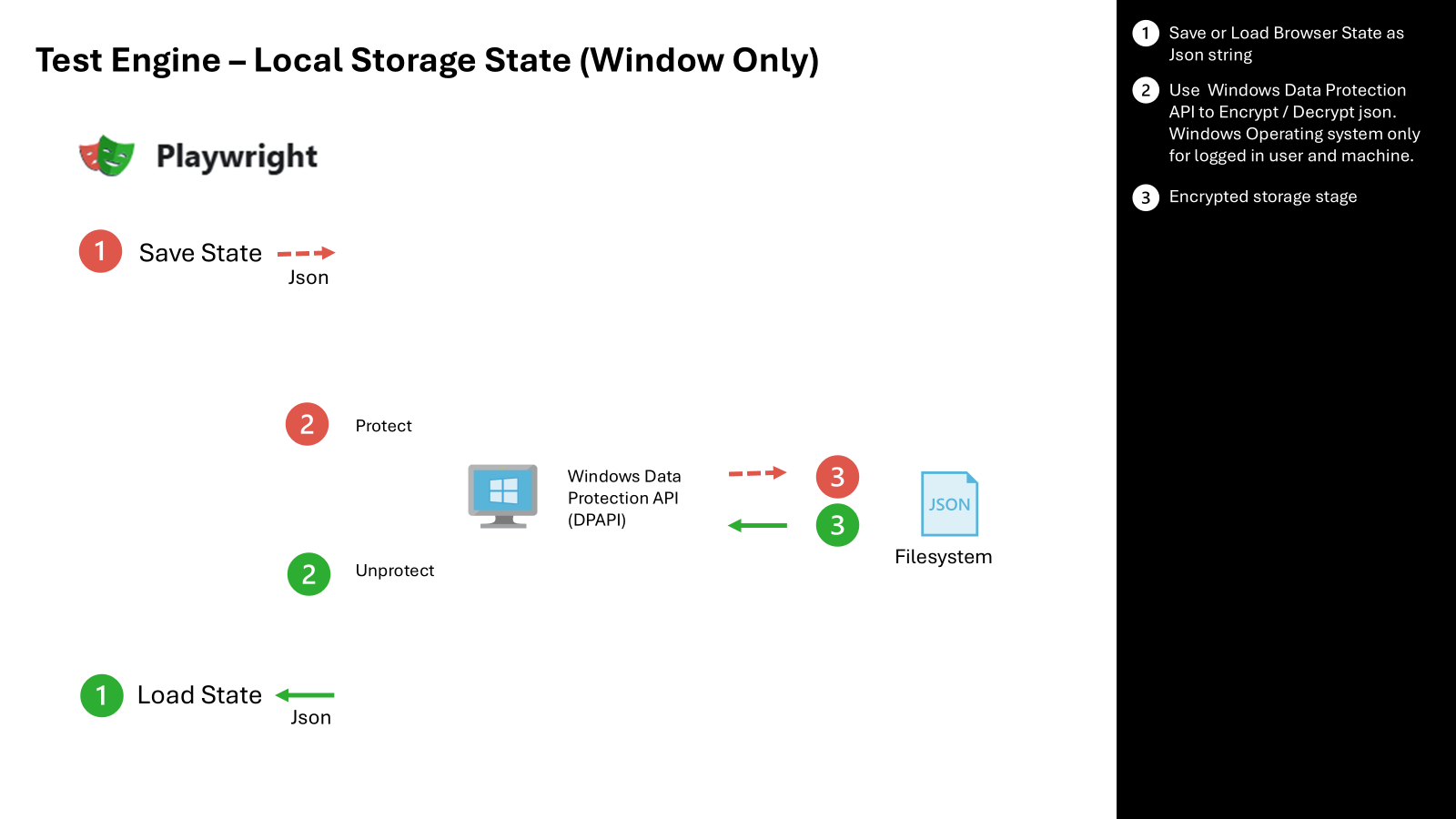

Local Windows PC

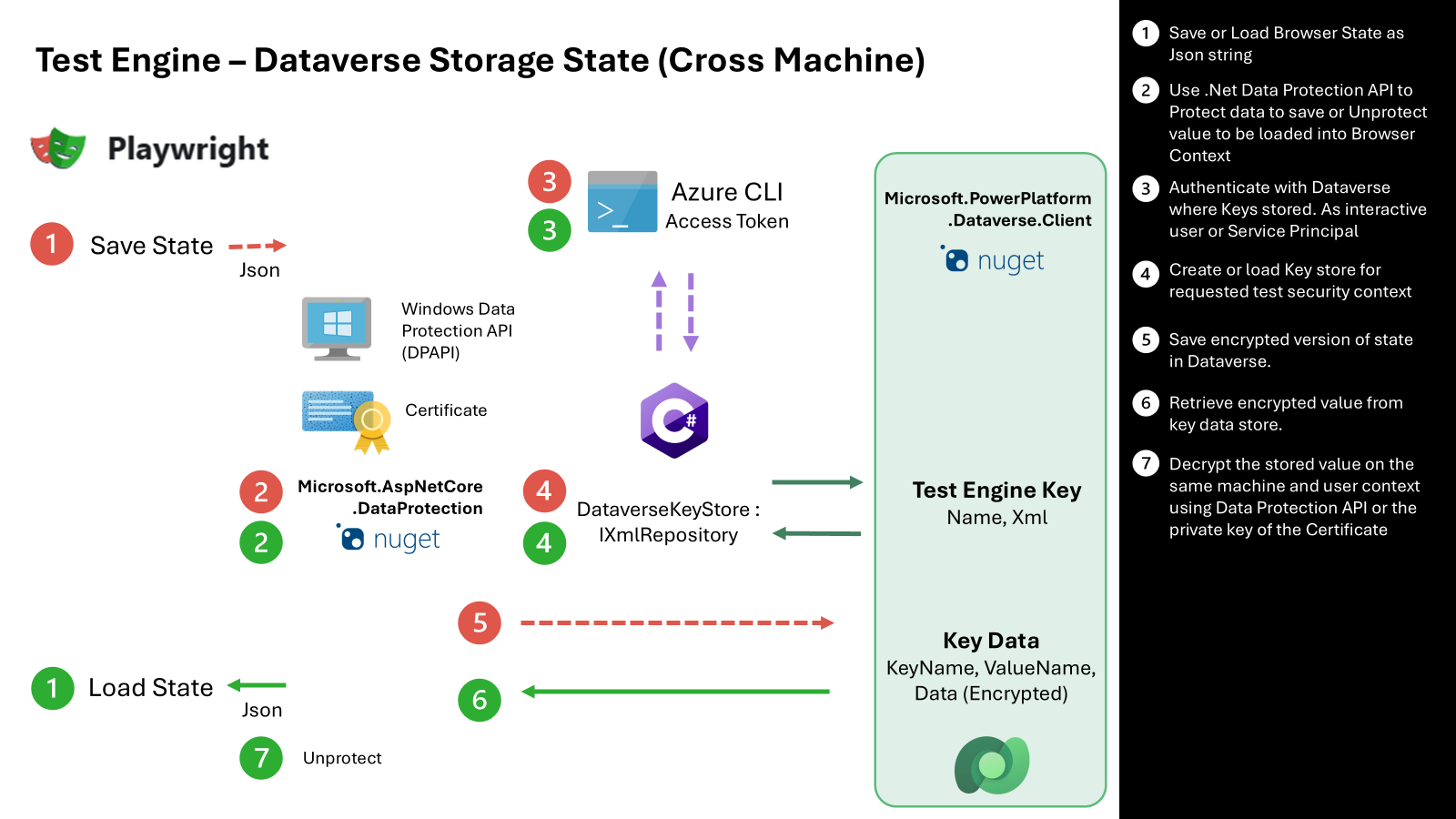

Data Protection with Dataverse

Local Windows

The first and simplest option for securing storage state is the Windows Data Protection API (DPAPI). This option has fewer components, but it has a limitation: it requires Microsoft Windows, and the protected files are tied to the local user account and machine. If you need to run tests on a different operating system or in pipelines, the Dataverse Data Protection approach is a better fit.

- The current storage state user authentication provider is extended to save and load the Playwright browser context as encrypted values.

- Use the Windows Data Protection API to protect and unprotect the saved state at rest.

- Save the encrypted JSON file to the file system, and later load and unprotect it for use in other test sessions.
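The save and load steps above could look roughly like the following minimal sketch. It assumes the storage state is already available as a JSON string, for example one produced by Playwright. The class name `DpapiStorageStateFile` and the entropy value are hypothetical and are not Test Engine's actual provider code. The sketch runs only on Windows. On .NET 6 or later it also needs the System.Security.Cryptography.ProtectedData NuGet package.

```csharp
using System;
using System.IO;
using System.Runtime.Versioning;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: stores a storage state JSON string as a DPAPI-protected file.
[SupportedOSPlatform("windows")]
public static class DpapiStorageStateFile
{
    // Hypothetical optional entropy: Unprotect only succeeds when the same value is supplied
    private static readonly byte[] Entropy = Encoding.UTF8.GetBytes("TestEngine.StorageState");

    public static void Save(string path, string storageStateJson)
    {
        byte[] plain = Encoding.UTF8.GetBytes(storageStateJson);
        // CurrentUser scope: only the same Windows user account can unprotect the blob
        byte[] protectedBytes = ProtectedData.Protect(plain, Entropy, DataProtectionScope.CurrentUser);
        File.WriteAllBytes(path, protectedBytes);
    }

    public static string Load(string path)
    {
        byte[] protectedBytes = File.ReadAllBytes(path);
        byte[] plain = ProtectedData.Unprotect(protectedBytes, Entropy, DataProtectionScope.CurrentUser);
        return Encoding.UTF8.GetString(plain);
    }
}
```

The CurrentUser scope binds the file to a Windows user account. Copying it to a different account, such as a build agent's service account, does not make it readable there. This is the limitation that the Dataverse option addresses.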

Data Protection Dataverse

The next approach uses a more comprehensive data protection model that allows saved user persona state to be shared across multiple machines. It takes a multi-layered approach that supports a wider set of operating systems and execution environments.

- The current storage state user authentication provider is extended to save and load the Playwright browser context as encrypted values.

- Use the Microsoft.AspNetCore.DataProtection package, which offers Windows Data Protection (DPAPI) or X.509 certificate public/private key encryption.

- Use the currently logged-in Azure CLI session to obtain an access token for the Dataverse instance where Test Engine key values and encrypted key data are stored.

- Use a custom XML repository that implements IXmlRepository to query and create Data Protection state.

- Store the Data Protection XML state in a Dataverse table. Encryption of the XML state is managed by the Data Protection API and the selected protection providers.

- Use the Dataverse security model, sharing, and auditing features to control and record access to keys and key data. The Data Protection API decrypts values so that the state JSON can be applied to other test sessions.

- Use the Data Protection API to decrypt the encrypted value with Windows DPAPI or an X.509 certificate private key.

- A future option could consider adding integration with Azure Key Vault.

Tell Me More

Let's explore each of these layers in more detail.

Playwright

Test Engine web-based tests encapsulate browser automation using Playwright. The key class in this process is BrowserContext, which provides a way to operate multiple independent browser sessions. The key element is the storage state of this browser context. It contains a snapshot of the current cookies and local storage, so a login completed interactively can be reused by later sessions, including headless ones.
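To make the storage state concrete, the following sketch deserializes the JSON shape Playwright uses when a browser context's storage state is saved. That shape is a `cookies` array plus an `origins` array holding `localStorage` entries. The record and class names are hypothetical, and only a subset of the cookie fields is modelled. The sketch is for inspection only and does not call Playwright.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical types for a subset of the JSON Playwright writes when a browser
// context's storage state is saved: { "cookies": [...], "origins": [...] }
public record StorageCookie(
    string Name,
    string Value,
    string Domain,
    string Path,
    double Expires,   // Unix time in seconds; -1 marks a session cookie
    bool HttpOnly,
    bool Secure);

public record LocalStorageEntry(string Name, string Value);

public record OriginState(string Origin, List<LocalStorageEntry> LocalStorage);

public record StorageState(List<StorageCookie> Cookies, List<OriginState> Origins);

public static class StorageStateReader
{
    // Playwright uses camelCase property names, so match them case-insensitively
    private static readonly JsonSerializerOptions Options = new() { PropertyNameCaseInsensitive = true };

    public static StorageState Parse(string json) =>
        JsonSerializer.Deserialize<StorageState>(json, Options)
        ?? throw new JsonException("Storage state JSON was null");
}
```

Because this file holds live authentication cookies, it is exactly the data that the protection layers described below are meant to encrypt at rest.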

Initial Login

With the Test Engine storage state provider, the initial login must be an interactive login. The process attempts to start a test session. If no previous storage state is available, or the saved state is no longer valid, the user is prompted to sign in via Entra before being allowed to access the requested page. This login uses whatever multifactor authentication and Conditional Access policies have been applied to validate the user sign-in.

Background reading on the related settings:

- Configured Authentication Methods

- How it works: Microsoft Entra multifactor authentication

- Reauthentication prompts and session lifetime for Microsoft Entra multifactor authentication

- Web browser cookies used in Microsoft Entra authentication

- What is Conditional Access?

- Configure adaptive session lifetime policies

- Require a compliant device, Microsoft Entra hybrid joined device, or multifactor authentication for all users

- Microsoft Defender for Cloud Apps overview

The goal of the login process is to work with the organization's defined login process, so that its configured authentication methods are validated.

Storage State

After a successful login, the storage state of the browser context can contain cookies that are used to authenticate later sessions.

The How to: Use Data Protection provides more information and details on this approach.
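The following sketch shows how saved cookies relate to later sessions. It inspects a saved storage state JSON and reports whether an interactive login is likely to be needed. `StorageStateCheck.NeedsInteractiveLogin` is a hypothetical pre-check, not Test Engine's own logic. It only looks at cookie expiry times. Playwright records `expires` as Unix seconds and uses -1 for session cookies.

```csharp
using System;
using System.Text.Json;

public static class StorageStateCheck
{
    // Hypothetical pre-check: true when there is no saved state, no cookies,
    // or every cookie with an expiry time has already expired.
    public static bool NeedsInteractiveLogin(string? storageStateJson, DateTimeOffset now)
    {
        if (string.IsNullOrWhiteSpace(storageStateJson)) return true;

        using JsonDocument doc = JsonDocument.Parse(storageStateJson);
        if (!doc.RootElement.TryGetProperty("cookies", out JsonElement cookies) ||
            cookies.GetArrayLength() == 0)
        {
            return true;
        }

        double nowSeconds = now.ToUnixTimeSeconds();
        foreach (JsonElement cookie in cookies.EnumerateArray())
        {
            double expires = cookie.TryGetProperty("expires", out JsonElement e) ? e.GetDouble() : -1;
            // A session cookie (-1) has no client-side expiry; a future expiry is still usable
            if (expires < 0 || expires > nowSeconds) return false;
        }
        return true;
    }
}
```

Even when this returns false, the service can still reject the cookies, for example after a sign-in frequency or Conditional Access change. The definitive test is whether the requested page loads without a redirect to the Entra sign-in page.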

Windows Data Protection API

.NET provides access to the data protection API (DPAPI), which allows you to encrypt data using information from the current user account or computer. When you use the DPAPI, you alleviate the difficult problem of explicitly generating and storing a cryptographic key.

Data Protection API

The Microsoft.AspNetCore.DataProtection package encrypts data with keys from a managed key ring. It can protect those keys at rest with Windows Data Protection (DPAPI) or X.509 certificate public/private key encryption. This ensures that sensitive data, such as login tokens, is securely encrypted and stored.

Key information to review for readers unfamiliar with the Data Protection API:

- Authenticated encryption details in ASP.NET Core with AES-256-CBC + HMACSHA256

- Key management in ASP.NET Core

- Custom key repository

- Windows DPAPI key encryption at rest: an encryption mechanism for data that’s never read outside of the current machine. Only applies to Windows deployments.

- X.509 certificate key encryption at rest

Encryption

By default, data values are encrypted with AES-256-CBC and authenticated with HMACSHA256.

AES-256-CBC stands for “AES-256 in Cipher Block Chaining (CBC) mode.” AES (Advanced Encryption Standard) is a widely used encryption algorithm that ensures data confidentiality. The “256” refers to the key size, which is 256 bits. CBC mode encrypts data in blocks. Each block of plaintext is XORed with the previous ciphertext block before being encrypted; the first block is XORed with a random initialization vector (IV). This ensures that identical plaintext blocks produce different ciphertext blocks, enhancing security.

HMACSHA256 stands for “Hash-based Message Authentication Code using SHA-256.” HMAC is a mechanism that provides data integrity and authenticity by combining a cryptographic hash function (in this case, SHA-256) with a secret key. SHA-256 is a member of the SHA-2 family of cryptographic hash functions, producing a 256-bit hash value.

When combined, AES-256-CBC + HMACSHA256 provides both encryption and authentication:

- Encryption with AES-256-CBC: The data is encrypted using AES-256 in CBC mode, ensuring that the data is confidential and cannot be read by unauthorized parties.

- Authentication with HMACSHA256: An HMAC is generated using SHA-256 and a secret key. This HMAC is appended to the encrypted data. When the data is received, the HMAC can be recalculated and compared to the appended HMAC to verify that the data has not been tampered with.

This combination ensures that the data is both encrypted (confidential) and authenticated (integrity and authenticity), providing a robust security mechanism.
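The encrypt-then-MAC combination described above can be sketched with the .NET cryptography primitives directly. This is a simplified illustration: the class name `AesCbcHmac` and the IV | ciphertext | tag layout are hypothetical. The real ASP.NET Core Data Protection format differs. It derives per-payload encryption and validation subkeys from the master key and prefixes a header and key identifier.

```csharp
using System;
using System.Linq;
using System.Security.Cryptography;

// Simplified encrypt-then-MAC sketch; NOT the ASP.NET Core Data Protection payload format.
public static class AesCbcHmac
{
    public static byte[] Protect(byte[] plaintext, byte[] encKey, byte[] macKey)
    {
        if (encKey.Length != 32) throw new ArgumentException("AES-256 needs a 32-byte key", nameof(encKey));
        using Aes aes = Aes.Create();
        aes.Key = encKey;
        aes.GenerateIV();   // fresh random IV for every payload
        byte[] ciphertext = aes.EncryptCbc(plaintext, aes.IV, PaddingMode.PKCS7);

        byte[] ivAndCipher = aes.IV.Concat(ciphertext).ToArray();
        byte[] tag = HMACSHA256.HashData(macKey, ivAndCipher);   // MAC covers IV + ciphertext
        return ivAndCipher.Concat(tag).ToArray();                // IV | ciphertext | tag
    }

    public static byte[] Unprotect(byte[] payload, byte[] encKey, byte[] macKey)
    {
        const int ivLength = 16, tagLength = 32;
        if (payload.Length < ivLength + tagLength) throw new CryptographicException("Payload too short");
        byte[] ivAndCipher = payload[..^tagLength];
        byte[] tag = payload[^tagLength..];

        // Verify integrity before decrypting, using a constant-time comparison
        byte[] expected = HMACSHA256.HashData(macKey, ivAndCipher);
        if (!CryptographicOperations.FixedTimeEquals(expected, tag))
            throw new CryptographicException("Payload was tampered with or the wrong key was used");

        using Aes aes = Aes.Create();
        aes.Key = encKey;
        return aes.DecryptCbc(ivAndCipher[ivLength..], ivAndCipher[..ivLength], PaddingMode.PKCS7);
    }
}
```

The sketch uses separate keys for encryption and authentication. Because the tag is checked before decryption, tampered payloads are rejected before any padding is processed.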

Dataverse Integration

The currently logged-in Azure CLI session is used to obtain an access token for the Dataverse instance where Test Engine key values and encrypted key data are stored. The Dataverse security model, sharing, and auditing features control who can access keys and key data, and record that access.

Further reading for readers unfamiliar with Azure CLI login, access tokens, and the Dataverse security model:

- az login

- az account get-access-token: uses the Azure CLI to obtain an access token. Here it obtains the token used by the Dataverse custom XML repository.

- Microsoft.PowerPlatform.Dataverse.Client: used by the custom XML repository to access Dataverse with the obtained access token.

- Security concepts in Microsoft Dataverse, in particular user- or team-owned records.

- Granting permission to tables in Dataverse for Microsoft Teams

- Record sharing: individual records can be shared on a one-by-one basis with another user (interactive or application user).

- Column-level security in Dataverse

- System and application users, specifically application users that could be used in the context of a CI/CD process.
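The sample later in this section calls an `AzureCliHelper.GetAccessToken` method that the original does not define. The following hypothetical helper shows one possible shape. It assumes the Azure CLI is installed and `az login` has already been run. It shells out to `az account get-access-token` with the `--resource`, `--query`, and `--output` options.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper: obtains a Dataverse access token from the signed-in Azure CLI.
public static class AzureCliHelper
{
    public static string BuildArguments(Uri resource) =>
        $"account get-access-token --resource {resource.GetLeftPart(UriPartial.Authority)} --query accessToken --output tsv";

    public static string GetAccessToken(Uri resource)
    {
        string args = BuildArguments(resource);
        // On Windows the CLI is installed as az.cmd, so launch it through cmd.exe
        var startInfo = OperatingSystem.IsWindows()
            ? new ProcessStartInfo("cmd.exe", "/c az " + args)
            : new ProcessStartInfo("az", args);
        startInfo.RedirectStandardOutput = true;
        startInfo.RedirectStandardError = true;
        startInfo.UseShellExecute = false;

        using Process process = Process.Start(startInfo)
            ?? throw new InvalidOperationException("Could not start the Azure CLI");
        // Read stderr asynchronously so a full stderr buffer cannot block the process
        var errorTask = process.StandardError.ReadToEndAsync();
        string token = process.StandardOutput.ReadToEnd().Trim();
        process.WaitForExit();
        string error = errorTask.Result;

        if (process.ExitCode != 0 || token.Length == 0)
            throw new InvalidOperationException($"az account get-access-token failed: {error}");
        return token;
    }
}
```

In a CI/CD pipeline, the same call can work once the CLI is signed in as a service principal (for example with `az login --service-principal`) that is mapped to a Dataverse application user.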

Custom XML Repository

A custom XML repository provides the ability to query and create Data Protection state by implementing IXmlRepository. The XML state of data protection is stored in a Dataverse table, with encryption managed by the Data Protection API and selected protection providers.

Note that IXmlRepository implementations don’t need to parse the XML passing through them. They treat the XML documents as opaque and let higher layers of the Data Protection API worry about generating and parsing the documents.
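The opaque-XML contract can be shown without any Dataverse or Data Protection dependencies. The following class is a hypothetical in-memory stand-in. It has the same two members as IXmlRepository (`GetAllElements` and `StoreElement`) but deliberately does not implement the real interface, so it needs no NuGet package. Each element is stored as a string and returned unchanged, and the store never inspects its contents.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical stand-in mirroring IXmlRepository's members; not the real interface.
public class InMemoryOpaqueXmlStore
{
    private readonly List<string> _rows = new();   // stands in for rows in a Dataverse table

    public IReadOnlyCollection<XElement> GetAllElements() =>
        _rows.Select(s => XElement.Parse(s)).ToList().AsReadOnly();

    // friendlyName is accepted to mirror the interface but not needed here
    public void StoreElement(XElement element, string friendlyName) =>
        _rows.Add(element.ToString(SaveOptions.DisableFormatting));
}
```

The Data Protection key manager, not the repository, writes the key elements and later interprets them. That division is what lets the Dataverse-backed store stay a thin persistence layer.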

Decryption Process

The Data Protection API decrypts the stored key using the Windows Data Protection API (DPAPI) or an X.509 certificate private key, then uses that key to decrypt the encrypted value. The decrypted value is then applied to other test sessions.
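The certificate option relies on a public/private key split. The following conceptual sketch wraps a random 256-bit key with a certificate's RSA public key, and unwraps it with the private key. The class name and self-signed test certificate are hypothetical. ASP.NET Core's ProtectKeysWithCertificate uses XML encryption rather than this exact code. Even so, the same split explains why any machine that must decrypt needs the certificate with its private key installed.

```csharp
using System;
using System.Security.Cryptography;
using System.Security.Cryptography.X509Certificates;

// Conceptual sketch of certificate-based key wrapping (not the ASP.NET Core implementation).
public static class CertificateKeyWrap
{
    public static X509Certificate2 CreateTestCertificate()
    {
        // Self-signed, in-memory, short-lived certificate for local experiments only
        RSA rsa = RSA.Create(2048);
        var request = new CertificateRequest("CN=TestEngineSample", rsa,
            HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);
        return request.CreateSelfSigned(DateTimeOffset.UtcNow.AddMinutes(-5), DateTimeOffset.UtcNow.AddDays(1));
    }

    // Anyone with the public certificate can wrap (protect) a key
    public static byte[] Wrap(X509Certificate2 cert, byte[] key)
    {
        using RSA publicKey = cert.GetRSAPublicKey() ?? throw new InvalidOperationException("Not an RSA certificate");
        return publicKey.Encrypt(key, RSAEncryptionPadding.OaepSHA256);
    }

    // Only a holder of the private key can unwrap it
    public static byte[] Unwrap(X509Certificate2 cert, byte[] wrappedKey)
    {
        using RSA privateKey = cert.GetRSAPrivateKey() ?? throw new InvalidOperationException("Certificate has no private key");
        return privateKey.Decrypt(wrappedKey, RSAEncryptionPadding.OaepSHA256);
    }
}
```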

Sample

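The following sample is a conceptual overview of how values are protected and unprotected using Dataverse as the IXmlRepository. It uses the DataverseKeyStore class and the FindMatch and StoreValue helpers shown later in this section.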

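```csharp
using Microsoft.AspNetCore.DataProtection;
using Microsoft.AspNetCore.DataProtection.Repositories;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;
using Microsoft.PowerPlatform.Dataverse.Client;
using Microsoft.Xrm.Sdk;
using Microsoft.Xrm.Sdk.Query;
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography.X509Certificates;
using System.Threading.Tasks;
using System.Xml.Linq;

public class Program
{
    public static void Main(string[] args)
    {
        ServiceProvider? services = null;
        var serviceCollection = new ServiceCollection();
        serviceCollection.AddLogging(configure => configure.AddConsole());

        var api = new Uri("https://contoso.crm.dynamics.com/");
        string keyName = "Sample";

        // Configure the Dataverse connection. AzureCliHelper.GetAccessToken is not shown in this
        // sample; it is assumed to return an access token for the environment from the Azure CLI.
        var serviceClient = new ServiceClient(api, (url) => Task.FromResult(AzureCliHelper.GetAccessToken(api)));
        serviceCollection.AddSingleton<IOrganizationService>(serviceClient);

        serviceCollection.AddDataProtection()
            // Encrypt keys at rest with the certificate (its private key is needed to decrypt them)
            .ProtectKeysWithCertificate(GetCertificateFromStore("localhost"))
            //.ProtectKeysWithDpapi()   // Windows-only alternative
            .AddKeyManagementOptions(options =>
            {
                // Runs when the options are first resolved, after services is assigned below
                options.XmlRepository = new DataverseKeyStore(services?.GetRequiredService<ILogger<Program>>(), serviceClient, keyName);
            });

        services = serviceCollection.BuildServiceProvider();
        var protector = services.GetDataProtector("ASP Data Protection");

        string? valueName = string.Empty;
        while (string.IsNullOrEmpty(valueName))
        {
            Console.WriteLine("Variable Name");
            valueName = Console.ReadLine();
        }

        var matches = FindMatch(serviceClient, keyName, valueName);
        if (matches.Count == 0)
        {
            string? newValue = string.Empty;
            while (string.IsNullOrEmpty(newValue))
            {
                Console.WriteLine("Value does not exist. What would you like the value to be?");
                newValue = Console.ReadLine();
            }

            // Only the protected (encrypted) value is sent to Dataverse
            StoreValue(serviceClient, keyName, valueName, protector.Protect(newValue));
            Console.WriteLine($"Saved value for {valueName}");
        }
        else
        {
            string data = protector.Unprotect(matches.First().Data);
            Console.WriteLine($"Value {valueName}: {data}");
        }

        Console.ReadLine();
    }

    // Hypothetical helper (not shown in the original sample): finds a certificate by subject
    // name in the current user's personal store. Unprotecting requires its private key.
    private static X509Certificate2 GetCertificateFromStore(string subjectName)
    {
        using var store = new X509Store(StoreName.My, StoreLocation.CurrentUser);
        store.Open(OpenFlags.ReadOnly);
        var found = store.Certificates.Find(X509FindType.FindBySubjectName, subjectName, validOnly: false);
        if (found.Count == 0)
        {
            throw new InvalidOperationException($"No certificate with subject '{subjectName}' was found.");
        }
        return found[0];
    }
}
```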

DataverseKeyStore

The following is possible code to query and store Data Protection keys, which arrive already encrypted with DPAPI or the certificate's public key. This layer only passes the opaque XML between Dataverse and the other Data Protection components.

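```csharp
// Stores Data Protection key XML in a Dataverse table (te_key). The XML is treated as opaque:
// it has already been encrypted by the configured protector before it reaches this class.
public class DataverseKeyStore : IXmlRepository
{
    private readonly ILogger<Program>? _logger;
    private readonly IOrganizationService _service;
    private readonly string _friendlyName;   // Key ring name used to group rows in te_key

    public DataverseKeyStore(ILogger<Program>? logger, IOrganizationService organizationService, string friendlyName)
    {
        _logger = logger;
        _service = organizationService;
        _friendlyName = friendlyName;
    }

    public IReadOnlyCollection<XElement> GetAllElements()
    {
        // Retrieve all key XML rows for this key ring from Dataverse
        var query = new QueryExpression("te_key")
        {
            ColumnSet = new ColumnSet("te_xml"),
            Criteria = new FilterExpression
            {
                Conditions =
                {
                    new ConditionExpression("te_name", ConditionOperator.Equal, _friendlyName)
                }
            }
        };

        var keys = _service.RetrieveMultiple(query)
            .Entities
            .Select(e => e.GetAttributeValue<string>("te_xml"))
            .Where(xml => !string.IsNullOrEmpty(xml))   // skip rows without XML instead of failing to parse
            .Select(xml => XElement.Parse(xml))
            .ToList();

        return keys.AsReadOnly();
    }

    public void StoreElement(XElement element, string friendlyName)
    {
        // friendlyName is the per-key name supplied by Data Protection (typically "key-" plus the key id);
        // rows are grouped by the key ring name (_friendlyName) instead.
        var keyEntity = new Entity("te_key")
        {
            ["te_name"] = _friendlyName,
            ["te_xml"] = element.ToString(SaveOptions.DisableFormatting)
        };

        _service.Create(keyEntity);
        _logger?.LogInformation("Stored data protection key {KeyName} in Dataverse", friendlyName);
    }
}
```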

Saving and Locating Encrypted Data

The following gives a simple view of possible code to store and retrieve encrypted values from Dataverse.

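```csharp
// Hypothetical shape of a stored value (not defined in the original sample)
public class ProtectedKeyValue
{
    public string? KeyId { get; set; }
    public string? KeyName { get; set; }
    public string? ValueName { get; set; }
    public string? Data { get; set; }   // Output of IDataProtector.Protect
}

// The following helpers belong inside the Program class from the sample above.
public static IReadOnlyCollection<ProtectedKeyValue> FindMatch(IOrganizationService service, string keyName, string valueName)
{
    // Find protected values with this key name and value name in Dataverse
    var filter = new FilterExpression(LogicalOperator.And);
    filter.Conditions.Add(new ConditionExpression("te_keyname", ConditionOperator.Equal, keyName));
    filter.Conditions.Add(new ConditionExpression("te_valuename", ConditionOperator.Equal, valueName));

    var query = new QueryExpression("te_keydata")
    {
        ColumnSet = new ColumnSet("te_keyname", "te_valuename", "te_data"),
        Criteria = filter
    };

    // GetAttributeValue returns null for empty columns, whereas the indexer throws
    var keys = service.RetrieveMultiple(query)
        .Entities
        .Select(e => new ProtectedKeyValue
        {
            KeyId = e.Id.ToString(),
            KeyName = e.GetAttributeValue<string>("te_keyname"),
            ValueName = e.GetAttributeValue<string>("te_valuename"),
            Data = e.GetAttributeValue<string>("te_data"),
        })
        .ToList();

    return keys.AsReadOnly();
}

public static void StoreValue(IOrganizationService service, string keyName, string valueName, string data)
{
    // data is already protected (encrypted) by the caller
    var keyEntity = new Entity("te_keydata")
    {
        ["te_keyname"] = keyName,
        ["te_valuename"] = valueName,
        ["te_data"] = data,
    };

    service.Create(keyEntity);
}
```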